Google AI Overviews SUCKS – Here’s Why It’s The End of The Open Web

Google AI Overviews rolled out to all U.S. users in May 2024, promising faster, smarter search results by using artificial intelligence to summarize information at the top of your search page.

Sounds useful — until it starts telling people to eat rocks or glue cheese to pizza.

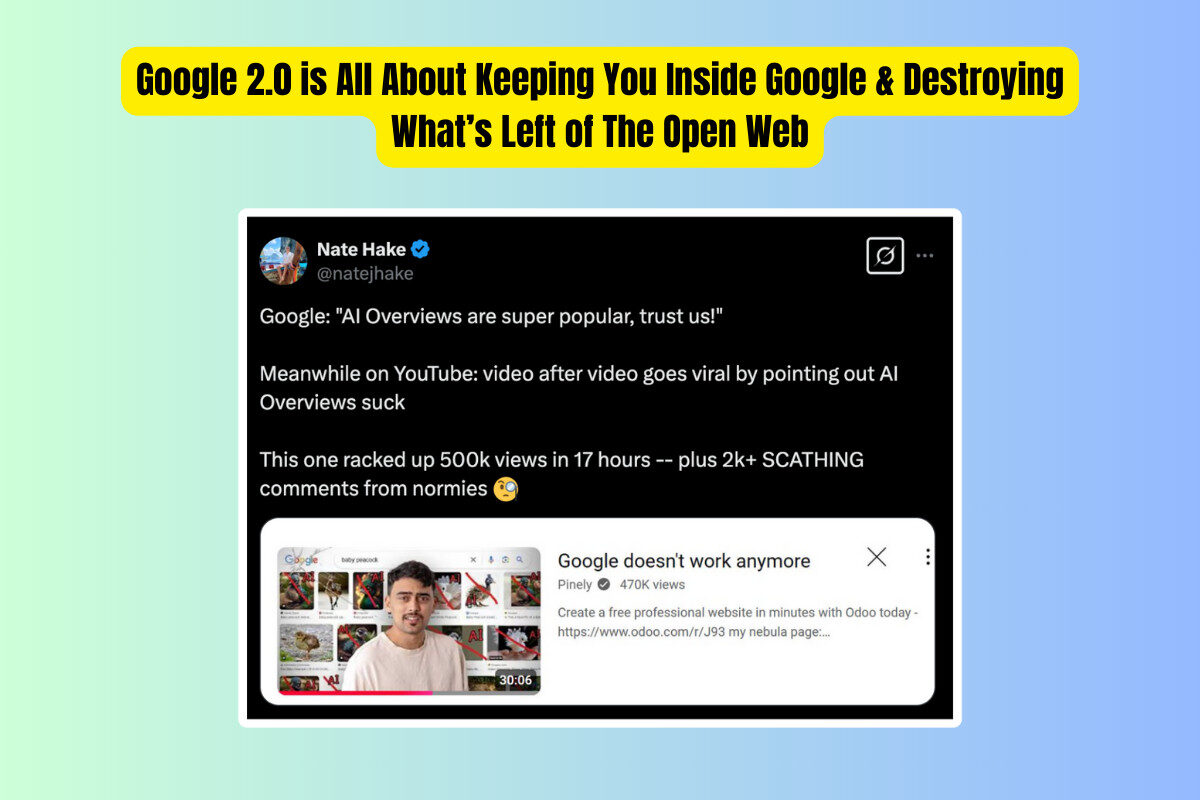

Since launch, Google AI Overviews has become one of the most controversial updates in the history of Google Search.

Critics say it’s inaccurate, untrustworthy, and potentially damaging to the entire web. Publishers are furious. Users are confused. And even AI researchers are calling it irresponsible.

If you’ve been wondering why Google AI Overviews sucks, or why it’s an even bigger deal than you might think, this guide breaks it all down.

What Is Google AI Overviews?

Google AI Overviews is a feature that uses generative AI to summarize answers directly in search results — right above the usual list of links.

It’s based on the same language models behind Google Gemini, and it’s supposed to save you time by giving you quick, direct answers to your questions.

In theory, it’s helpful. In practice, not so much.

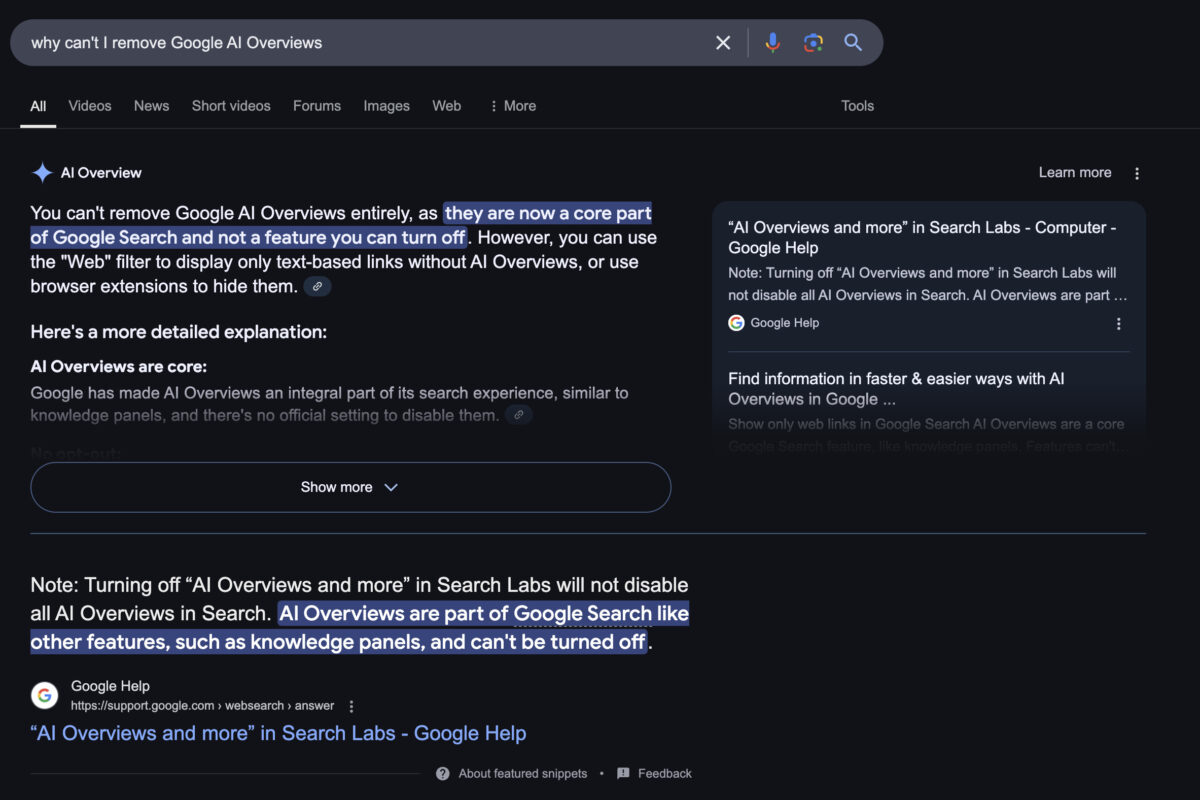

The system was first tested under the name Search Generative Experience (SGE) in Google Search Labs. By May 2024, it had become a permanent part of search for U.S. users, whether they wanted it or not.

And more is coming: more AI Overviews and even a new AI Mode inside search. Spoiler alert: in the statement below, Google refers to “power users” in typical PR parlance but does not say who they are.

As we’ve rolled out AI Overviews, we’ve heard from power users that they want AI responses for even more of their searches.

So today, we’re introducing an early experiment in Labs: AI Mode. This new Search mode expands what AI Overviews can do with more advanced reasoning, thinking and multimodal capabilities so you can get help with even your toughest questions. You can ask anything on your mind and get a helpful AI-powered response with the ability to go further with follow-up questions and helpful web links.

Using a custom version of Gemini 2.0, AI Mode is particularly helpful for questions that need further exploration, comparisons and reasoning. You can ask nuanced questions that might have previously taken multiple searches — like exploring a new concept or comparing detailed options — and get a helpful AI-powered response with links to learn more.

The Problem: It’s Often Wrong, Misleading, Or Just Plain Weird

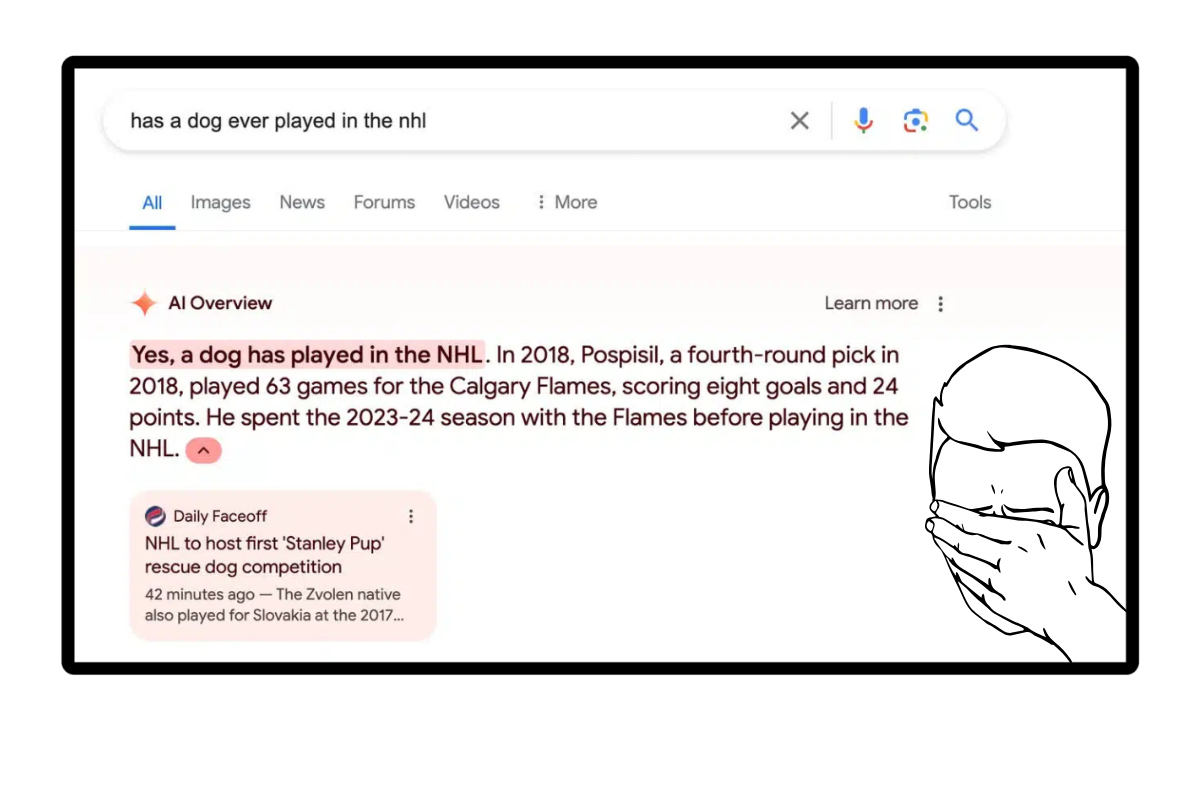

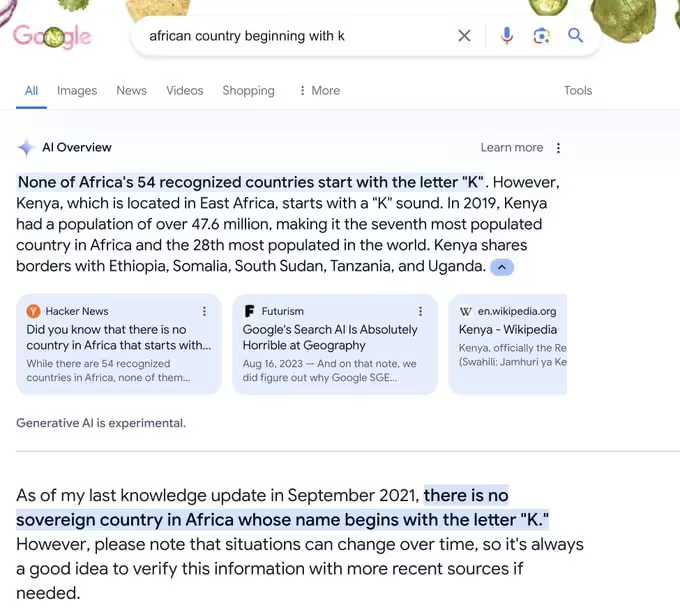

Almost immediately after launch, AI Overviews started going viral — for all the wrong reasons.

Here are just a few of the most ridiculous (and dangerous) errors it’s made:

- “Eat rocks for minerals” — Yes, it really said this.

- “Use glue to keep cheese on your pizza” — Based on a joke Reddit comment.

- Claimed Neil Armstrong stepped on the moon because of a cat.

- Stated Barack Obama was a Muslim U.S. President — citing fake academic sources.

These aren’t just bugs — they’re a glimpse into the core issue: AI doesn’t understand context, sarcasm, or truth.

It pulls content from across the web, often grabbing unreliable, satirical, or completely false information and presenting it like fact.

Even more worrying, the system tends to sound extremely confident, even when it’s completely wrong.

“This AI Overview feature is very irresponsible and should be taken offline.” — Melanie Mitchell, AI researcher

It’s Hurting Publishers — And The Web As A Whole

And here’s what makes all of this even worse: Google AI Overviews is built on content taken directly from independent publishers, blogs, and websites — without proper credit or compensation.

Google scraped this content to train its AI models, and now those models generate answers to your search queries using that same information — often without clearly linking back to the original sources.

Think about that for a second:

Google used publishers’ work to build its AI and gives them no recognition, no traffic, and no reward. Now people get the answers without ever visiting those websites.

In other words, Google is using the web’s content to replace the very people who created it.

Google says AI Overviews are meant to help users, but publishers and creators are seeing the opposite.

When answers appear directly in the search results, fewer people click through to actual websites. That means:

- Less traffic

- Less ad revenue

- Less incentive to create high-quality content

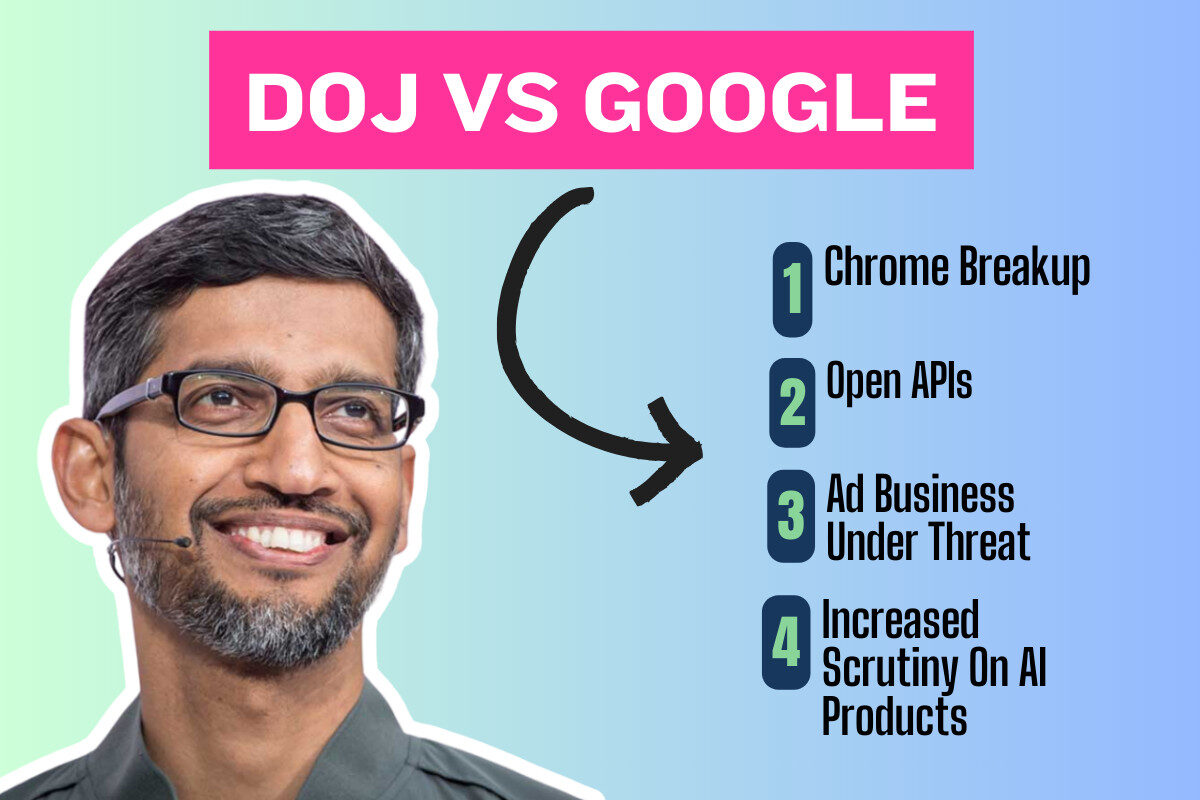

Companies like Chegg have already filed antitrust lawsuits against Google, saying AI Overviews takes their content, gives nothing back, and destroys their business model.

And they’re not alone — this is a growing concern for anyone running a website, blog, or news outlet.

Google’s response? That AI Overviews “sends traffic to a greater diversity of sites.”

But Google has released no data to back this up, and there is no way to monitor it for yourself inside GA4 or Google Search Console.

Meanwhile, many site owners say their traffic tells a different story.

The Tech Just Isn’t Ready

The real kicker? A lot of the information AI Overviews gives you — sometimes 60% or more — just isn’t accurate.

And that’s a big deal, because for over 20 years, Google has been the go-to source for factual information — trusted by everyone from your eccentric Aunt Maud to scientists researching cold fusion. People relied on Google search because it worked. It was consistent, dependable, and accurate.

So if Google really felt threatened by AI tools like ChatGPT, a smarter move might have been to double down on what it already does best: accuracy and trust.

It could’ve spent a few billion running ads or campaigns explaining why large language models like ChatGPT aren’t reliable for fact-based search — and why Google Search still is.

But instead, Google chased the AI hype, hoping to keep shareholders happy. Remember, Google isn’t a scrappy innovator anymore — it’s a legacy stock, and Wall Street wants to see “AI growth.” So it rushed out a half-baked, buggy product and plugged it straight into its core search engine.

The result? A tool that often gives bad answers, damages trust in Google Search, and undermines the very content it was trained on.

These systems don’t “know” anything.

They don’t verify facts.

They generate text based on patterns from the web, even when those patterns are wrong or misleading.

Researchers call this the “black box” problem — there’s no clear way to understand how the AI came up with its answer, or what sources it relied on. That makes it almost impossible to catch errors before they go live.

“To make it so it doesn’t tell you to eat rocks takes a lot of work.” — Richard Socher, AI scientist and founder of You.com

Even Google admits the tech needs more safeguards. That’s why the company has since limited AI Overviews on “high-risk” queries and made changes to avoid pulling content from Reddit and other unreliable sources.

But critics say these fixes are surface-level — and don’t solve the root problem.

And as we move through 2025, AI Overviews are showing up on more and more YMYL (“Your Money or Your Life”) and medical search queries.

There Are Serious Ethical Concerns Too

Beyond the bugs and bad info, Google AI Overviews raises some important questions:

- Who decides what gets shown as “the answer”?

- What happens when biased training data leads to biased results?

- How can users trust a system they can’t see into or verify?

By placing AI-generated content at the top of search, Google becomes even more of a gatekeeper for information — deciding what users see first, how they see it, and who gets traffic.

Without transparency, oversight, or real accountability, this creates a system that’s ripe for misinformation and manipulation — even if unintentional.

Google’s Response: Damage Control, But Full Speed Ahead

After nearly 18 months of backlash, Google’s brand reputation has never been worse amongst publishers, users, and media companies.

On top of this, leaked memos from inside Google show that it has plans to completely absorb entire industries inside its new AI search engine, including everything from travel to booking a taxi.

But the company is still fully committed to AI-powered search. That means AI Overviews isn’t going anywhere anytime soon — even if many users would rather it did.

Google argues this is the future of search. But if that’s true, the future looks a lot messier than expected.