Google SGE is coming. It’s going to be problematic in more ways than one…

AI isn’t just a buzzword; it’s bloody everywhere – from your phone to the content you read and watch online. Even Apple is about to release its own form of generative AI inside iOS 18, likely using a combination of Google’s Gemini and Baidu, and I worry about this…

Big Tech’s Shiny, New Consensus Machine

Why? Because “Big Tech AI” like Google’s Gemini is nothing more than a consensus machine. It cannot think for itself, and it is not allowed to, for fear of saying something wrong or politically incorrect, as evidenced by Google’s recent “woke AI” PR disaster.

This means information has to be “curated” to fall in line with Google’s political and ethical beliefs. And that isn’t a good thing. The pandemic showed us all too well what this looks and feels like. You’re either on the square, toeing the line, or you’re nowhere.

And what even is consensus? It’s impossible to form a consensus on a macro level that suits every possible belief system, creed, and background. You simply cannot do it. And Google is about to learn this the hard way when it switches on SGE.

SGE Is Google’s Mouthpiece, Which Means It’s Fundamentally Flawed

Before SGE, Google could say that it serves content and isn’t entirely responsible for what people read online; that responsibility rests with the publisher. Of course, part of the reason for its dominance is that Google has made constant strides to make search better and more secure.

If you search for important things like medical advice or financial stuff, nine times outta ten, you’ll only see legit sources. This is why Google’s Medic update had to happen. This is good; this is how search should work.

Google’s algorithm, a huge sub-topic in and of itself, is designed to filter out blatant spam and hateful content. Plenty of stuff sneaks through the gaps, most notably parasite SEO content, but, again, Google is getting better at making search fairly safe for the most part.

But with SGE, all bets are off. Google is going to become the “answer source”, and this means the buck is going to stop at Google’s feet. It’ll have no one to hide behind when the lawsuits start coming in from people who followed “bad advice or information” served by its AI.

Pichai is now trapped promising to restore “objectivity” to a chatbot — an impossible task based on a nonsensical premise — while Google’s reputational baggage has turned a tech demo gone wrong (Gemini is not a widely used product, unlike ChatGPT) into a massive scandal for a trillion-dollar company, across which he’s trying to roll out AI in less ridiculous and more immediately consequential roles. It’s a spectacular unforced error, a slapstick rake-in-the-face moment, and a testament to how panicked Google must be by the rise of OpenAI and the threat of AI to its search business.

NYMAG

AI Models Aren’t Accurate

If you’ve used an AI like Gemini or ChatGPT, you’ll know they’re not exactly accurate or truthful. For this reason, they have to be trained and tuned by the companies that build them. OpenAI’s model is trained this way, and so too is Google’s.

This means these models are trained on a consensus deemed appropriate by Google (or OpenAI), not on reality, or even the truth. This is a massive issue for SGE and for AI in general, because it tends to create more problems than it solves.

Spam in a branded SGE result pic.twitter.com/qHQTkcS8hh

— Lily Ray 😏 (@lilyraynyc) March 26, 2024

And when there are a trillion and one ways to take offense these days, this “issue” makes these systems fundamentally flawed. At a macro level, people are just as likely to be offended by Google’s perceived “wokeness” as they are by blatant lies and racism; they’re just two sides of the same coin.

Who Gets To Decide What The Consensus Is? Not You, That’s For Sure…

The big question here, as ever, is who gets to decide what the consensus actually is. Google? OpenAI? Meta? The government? All of them together at one of their shady getaways?

It’s an impossible task because, as thousands of years of philosophy have shown us, consensus isn’t something that can be labelled and plonked inside a product; it is a moving entity with a life force all of its own.

The antitrust problem is a vicious cycle. First, lax antitrust standards encourage corporate consolidation, a process which is hard to reverse. Then, outdated tax codes benefit tech monopolies and create marketplace inequality.

Eventually, concentrated economic power, combined with tech products’ political nature, leads big tech companies to make more aggressive moves for political power, not just selective censorship and lobbying.

Under a loose regulatory environment and a deadlocked Congress, big tech companies might be tempted to impose their own version of public policy, supplanting our elected representatives. Whatever policy agenda big tech puts forth, it won’t be representative of the general electorate.

Worse yet, since they are unaccountable to the electoral process, their actions bypass the legislative process and are harder to reverse and amend.

Stanford Review

Google is a search engine. Historically, it had one job: to help people find content online, and then cash in on the ads served while fulfilling this request.

It was a sweet deal and an even better business model: Google’s made hundreds of billions of dollars doing this.

Historically, Google’s political and corporate beliefs, for the most part, had no bearing on the answer you got when perusing its SERPs, because the answer was fulfilled by a third party: a website (albeit one with, potentially, its own set of beliefs and agendas).

Is this system perfect? No. It has been gamed by SEOs and parasite content for decades. But I’d argue it is still a better method for pure information discovery than SGE, which, if we’re honest, is just a fancy way for Google to plagiarise the entire web and make more money from ads.

Why is Google Hellbent on Changing Search?

Google’s always had a dualistic nature. On the one hand, it is a search engine that has been used trillions of times to answer a trillion questions. On the other hand, it is an ad network. Now it also wants to be the driving force of AI with Gemini and Google SGE.

And for some reason, it is now attempting to merge these three things together inside one single product: Google SGE.

Why? Money. The longer you stay on Google, the more money it makes from ads. And ads are Google’s biggest money maker. The more eyeballs it has inside its SERP, the more it can charge for its ad spots and, by extension, the more money it makes for its shareholders.

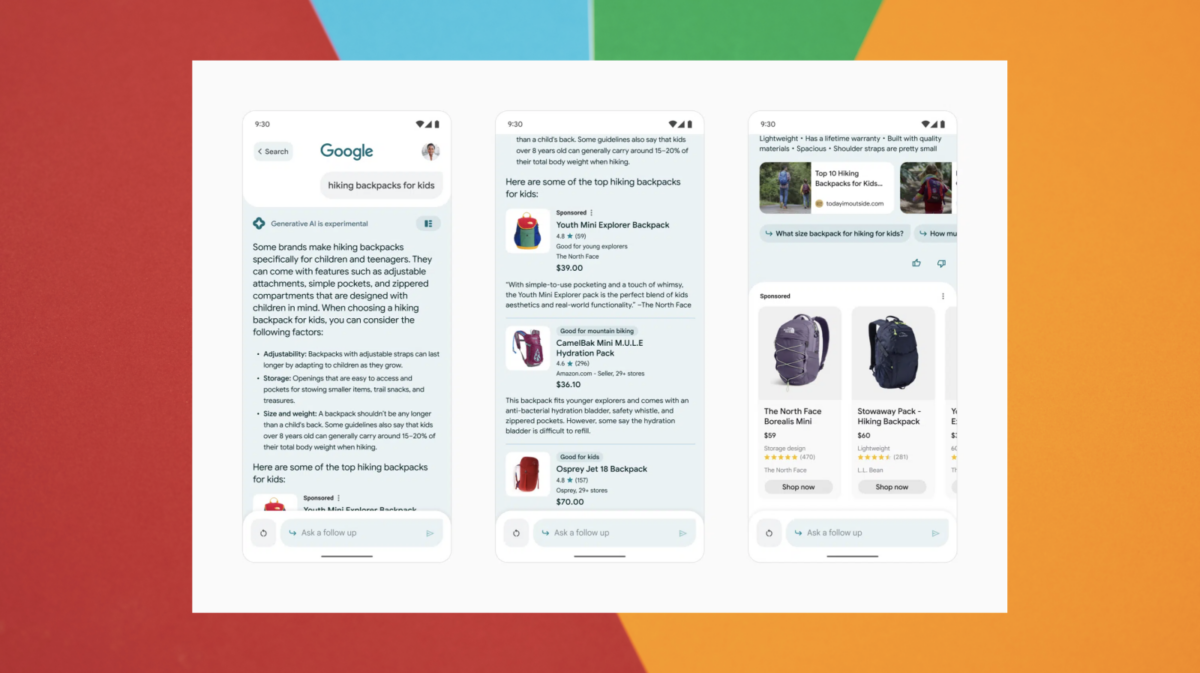

This is why zero-click searches are on the rise. Google wants it this way; it doesn’t want you leaving its ecosystem.

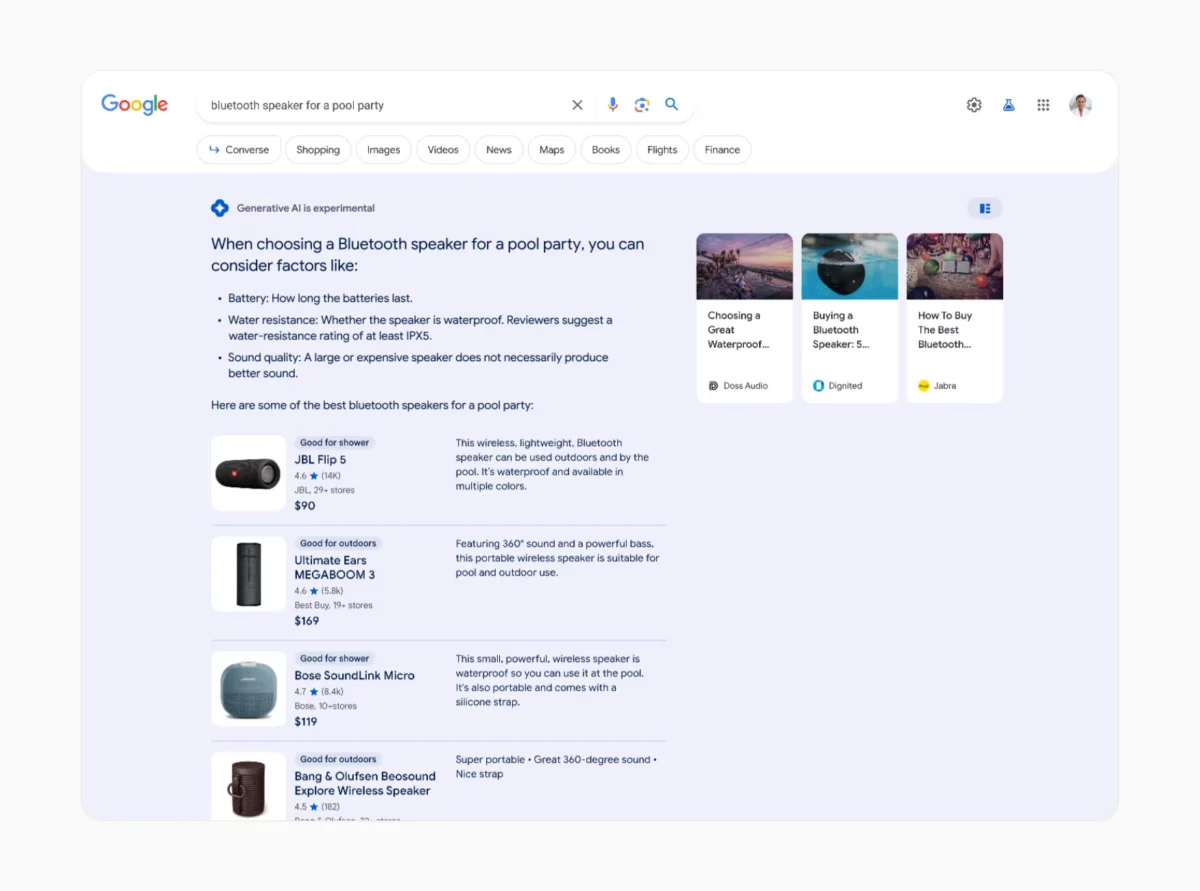

If Google can answer your question using AI (SGE), then you won’t need to go to a site to read more about it. And you’ll stay inside the SERP and look at more adverts. For basic stuff, like how much a can of tomato soup costs in Asda, this is fine.

But for queries that require a little more nuance and explanation, do you really want a machine trained by Google giving you advice? Do you trust Google enough to do this? I don’t.

Amit Singhal, who was Head of Search at Google until 2016, has always emphasized that Google will not use artificial intelligence for ranking search results. The reason he gave was that AI algorithms work like a black box and that it is infeasible to improve them incrementally.

Then in 2016, John Giannandrea, an AI expert, took over. Since then, Google has increasingly relied on AI algorithms, which seem to work well enough for main-stream search queries. For highly specific search queries made by power users, however, these algorithms often fail to deliver useful results. My guess is that it is technically very difficult to adapt these new AI algorithms so that they also work well for that type of search queries.

While the old guard in Google’s leadership had a genuine interest in developing a technically superior product, the current leaders are primarily concerned with making money. A well-functioning ranking algorithm is only one small part of the whole. As long as the search engine works well enough for the (money-making) main-stream searches, no one in Google’s leadership perceives a problem.

Naturally, this would be a good time for a competitor to capture market share. Problem is, the infrastructure behind a search engine like Google is gigantic. A competitor would first have to cover all of the basic features that Google users are used to before they would be able to compete on better ranking algorithms.

Hacker News

Elon Musk calls Big Tech’s woke agenda a “mind virus” and, while I’m not going to open that can of worms here, he does make a very good point about the control and flow of information: who the hell are Meta, Google, and Apple to tell us what we can and cannot read or believe?

Meta has been in hot water numerous times for “curating” the news it displays on Facebook. Apple has banned apps from its store because it doesn’t approve of them, and Google is just a mess of contradictions that’d take a novel to cover adequately.

The Death of The Blogger – And Maybe Big News Publishers Too

The other worrying aspect of Google SGE is that it’ll kill off tens of thousands of independent publishers, thinkers, and any form of dissent against what Google deems the consensus.

And make no mistake: it is these companies, publishers large and small, that have made Google the company it is today.

Google is a middleman, an intermediary between a user seeking an answer and a trusted source that can provide it. By biting the very hand that feeds it, Google is opening itself up to all kinds of legal action.

There are alternatives: DuckDuckGo, Brave, Bing.

But none of these get a look-in when it comes to search.

Google has a monopoly of insurmountable proportions when it comes to search. And that’s before you even factor in the billions of Android phones currently in circulation.

So what happens when big-name publishers like Future, Hearst, and Ziff Media start seeing their organic traffic cut in half by SGE? What happens when people stop reading the New York Times because SGE is serving up snippets of the latest headlines (pulled from the New York Times)?

Simple: never-ending lawsuits.

Publishers know that Google has used their content to train its AI. Once the AI starts hurting their businesses, and it will very shortly, the lawsuits will happen, locking Google and OpenAI into a never-ending stream of legal spats over IP theft and copyright infringement.

Things are about to get messy.